Comparing Learning Mechanisms: Neural Networks vs. Human Children

Can machines really learn as humans do? Large language models come close, soaking up data and recognizing patterns. But children have an edge: curiosity, emotion, and social intelligence. As AI advances, understanding where the two kinds of learning overlap, and where they part ways, becomes more important.

Imagine a world where machines learn just like our children do. It sounds like science fiction, but with the advent of Large Language Models (LLMs), we're closer than ever to understanding the parallels between artificial and human learning. Let's embark on a journey to explore these intriguing similarities and differences.

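Picture this: a pretrained LLM is shown a single example in its prompt and picks up the pattern, almost as if it's memorizing a song after hearing it just once. Now, think of a toddler. They hear a new word, perhaps "giraffe," and after a couple of mentions, they point excitedly at a picture of the long-necked animal in a storybook. Machine learning researchers call learning from a single example "one-shot learning" (developmental psychologists call the children's version "fast mapping"), and the parallel is striking. There is a catch, though: the LLM gets there only after training on enormous amounts of text, while the toddler manages with far less.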

LLMs are like sponges, soaking up specific examples. But sometimes they absorb the details so thoroughly that they miss the bigger picture, a problem researchers call overfitting. Children, on the other hand, have an innate knack for generalization. Show them a few different dogs, and soon they're calling every four-legged furry creature a "doggy." They sometimes overshoot, but they're looking beyond the specifics, capturing the essence of what makes a dog a dog.
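The gap between soaking up examples and generalizing from them can be sketched in a few lines of Python. This is a toy illustration, not how an LLM or a child actually works: the features (leg count, weight in kilograms), the labels, and the helper names `memorize` and `nearest_neighbor` are all made up for the example.

```python
def memorize(examples):
    """A pure 'sponge': recalls only the exact examples it has seen."""
    table = dict(examples)
    return lambda item: table.get(item)  # None for anything unseen


def nearest_neighbor(examples):
    """A simple generalizer: labels a new item like its most similar example."""
    def predict(item):
        def sq_dist(example):
            features, _label = example
            return sum((a - b) ** 2 for a, b in zip(features, item))
        return min(examples, key=sq_dist)[1]
    return predict


# Hypothetical toy data: (number of legs, weight in kg) -> label
examples = [((4, 30.0), "doggy"), ((4, 8.0), "doggy"), ((2, 0.1), "bird")]

rote = memorize(examples)
general = nearest_neighbor(examples)

new_dog = (4, 15.0)  # a dog that was never in the examples
cat = (4, 4.0)       # four legs, smallish: not a dog at all

print(rote(new_dog))     # None: the memorizer has nothing to say
print(general(new_dog))  # doggy
print(general(cat))      # doggy: the toddler-style overgeneralization
```

The memorizer is perfect on what it has seen and silent on everything else; the generalizer handles the new dog but also calls the cat a "doggy," much like the toddler in the paragraph above.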

An LLM is like a diligent student, always focused, always processing data. But what it lacks is the spark of curiosity. Now, think of children: their endless "whys" and "hows," their fascination with the world around them, and their insatiable hunger for knowledge. It's this curiosity that drives them to learn, to explore, and to understand the world in all its complexity.

LLMs come with a vast reservoir of knowledge, ready to be fine-tuned for many different tasks. It's like having a toolbox with every tool imaginable. In comparison, children might seem like they have a smaller toolbox, but their ability to use a single tool in multiple ways is astounding. The same problem-solving skills they use to build a tower of blocks can be applied to solve a math problem.
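To make the idea of fine-tuning a little more concrete, here is a minimal sketch under heavy simplifying assumptions. The "model" is a single number `w` in the rule y ≈ w · x, and the starting value 1.8 stands in for knowledge carried over from a related, hypothetical earlier task. Real fine-tuning involves billions of parameters, but the intuition is similar: starting from useful prior knowledge means less work to learn something new.

```python
def steps_to_fit(xs, ys, w_start, lr=0.01, tol=1e-3, max_steps=10_000):
    """Count the updates needed before y ≈ w * x fits the data within tol."""
    w = w_start
    n = len(xs)
    for step in range(max_steps):
        mse = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n
        if mse < tol:
            return step
        # Gradient of the mean squared error with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return max_steps


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x for x in xs]  # the "new task": y = 2x

from_scratch = steps_to_fit(xs, ys, w_start=0.0)  # no prior knowledge
fine_tuned = steps_to_fit(xs, ys, w_start=1.8)    # assumed weight from a related task

print(from_scratch, fine_tuned)  # the fine-tuned start needs fewer updates
```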

For LLMs, learning is a solitary journey confined to datasets and algorithms. But for children, learning is a social adventure. They learn by watching, by imitating, and, most importantly, by interacting. Every playdate, every storytime, every interaction is a learning opportunity, teaching them about the world and their place in it.

During training, LLMs learn through a kind of trial and error: they make a prediction, measure how wrong it was, and adjust their parameters to get closer to the right answer. It's a systematic, calculated process. Children, too, learn from their mistakes, but there's an emotional aspect to it. The disappointment of a toppled block tower, the joy of getting a math sum right after a few attempts: it's this emotional roller-coaster that drives their learning journey.
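The machine side of this trial and error can be sketched as a guess, check, adjust loop. The example below uses gradient descent on a one-parameter toy model; the data and the function name `fit_slope` are invented for illustration, and actual LLM training applies the same basic idea at a vastly larger scale.

```python
def fit_slope(xs, ys, lr=0.01, steps=200):
    """Fit y ≈ w * x by repeatedly guessing, measuring the error, and adjusting."""
    w = 0.0  # initial guess
    for _ in range(steps):
        # How the mean squared error changes as w changes
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad  # nudge w in the direction that reduces the error
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # toy data generated by y = 2x

w = fit_slope(xs, ys)
print(round(w, 3))  # 2.0
```

Every pass through the loop is one "attempt." Nothing in it resembles disappointment or joy; the only signal is a number that says how far off the last guess was.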

Dive into the architecture of an LLM, and you'll find layers upon layers meticulously designed. It's a marvel of engineering. But children? They come with their own pre-wired structures and an innate understanding of certain concepts. Some say it's nature's way of giving them a head start in the learning race.

As we stand at the crossroads of technology and human cognition, the parallels between LLMs and children offer a glimpse into the future. A future where machines might not just learn from data but perhaps, just perhaps, with a touch of human-like curiosity and wonder.


#Artificial Intelligence #Machine Learning #Large Language Models (LLMs) #Human Learning #Cognitive Parallels #Children's Learning #Curiosity# Emotion #Social Intelligence #Learning Process #Natural Language Processing #Neural Networks #Trial and Error Learning #Emotional Learning #Human Cognition #Technology and Learning #Learning Models #Future of AI

Immerse yourself in the game-changing ideas of OpenExO.

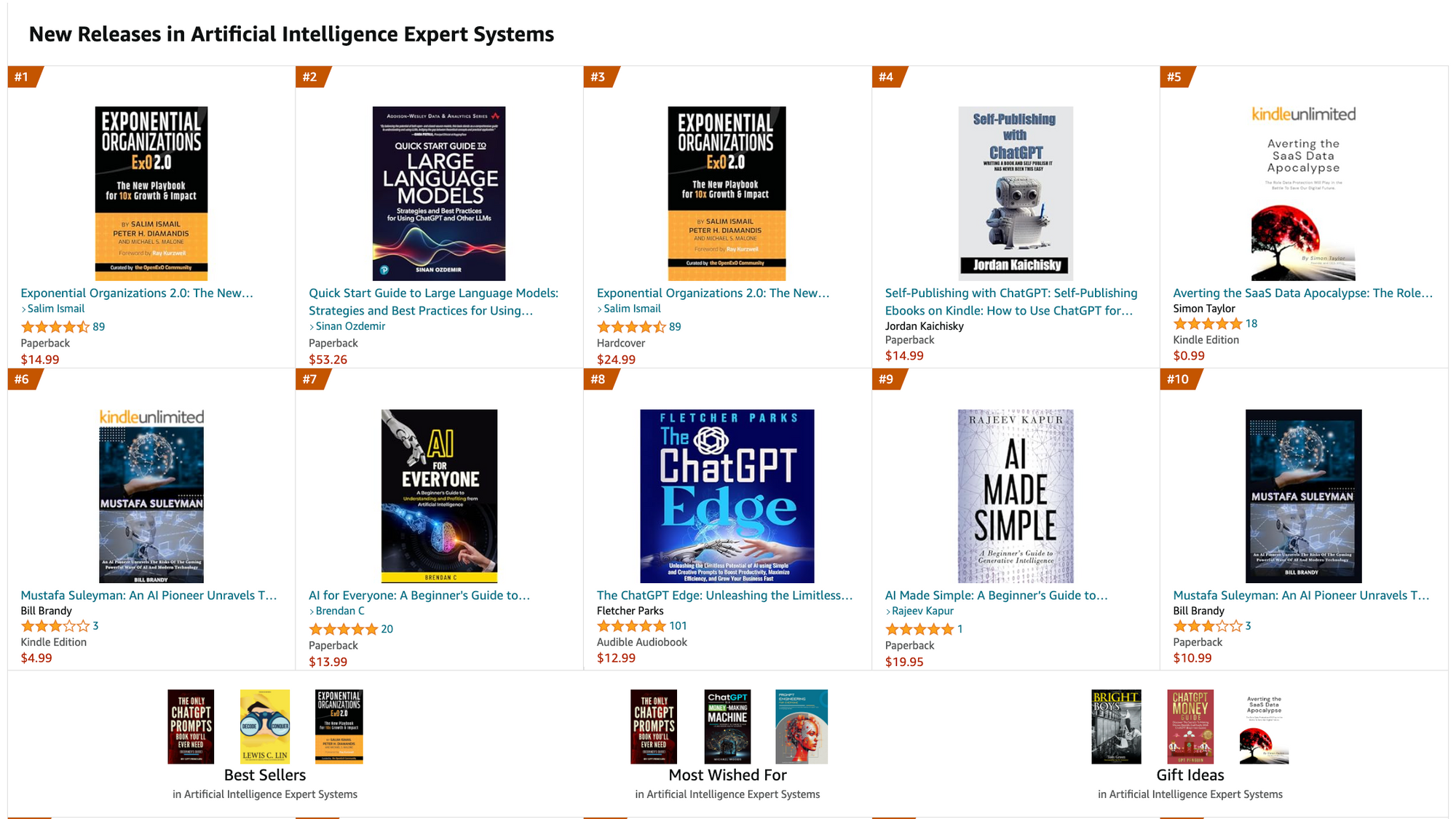

Begin your journey here 🎟️ExOPass & 📚Exponential Organizations 2.0

Join the ExO Community. Break norms, reshape boundaries, and be part of pioneering a brighter, more prosperous future.

ExO Insight Newsletter

Join the newsletter to receive the latest updates in your inbox.