Speaking Silently: the Neuroscience of Sign Language

"Change your language and you change your thoughts." (Karl Albrecht)

Unlike other animals, humans come into life with a remarkable ability: speech. However, contrary to the common misconception that equates speech with language, studies in cognitive neuroscience since the 1960s have shown that sign language is an independent language. It is organized in complex ways across the linguistic levels of meaning and grammar. How does sign language differ from spoken language? Do the two share common features in the brain? And how does the brain process signed and spoken language?

A shared brain region to process sign and spoken language

In a study recently published in the journal Human Brain Mapping [1], researchers at the Max Planck Institute for Human Cognitive and Brain Sciences (MPI CBS) in Leipzig, Germany, investigated which brain areas are responsible for processing sign language. They also measured how much these areas overlap with the areas that hearing individuals use to process spoken language. To do so, they conducted a meta-study, pooling data from sign language processing experiments conducted around the world. “A meta-study allows us to get an overall picture of the neural basis of sign language. So, for the first time, we were able to statistically and robustly identify the brain regions that were involved in sign language processing across all studies,” says Emiliano Zaccarella, group leader in the Department of Neuropsychology.

Across studies, the researchers found that Broca’s area, located in the frontal lobe of the left hemisphere, was strongly involved in processing sign language. This is not surprising: Broca’s area is well known to be active during spoken language processing and is involved mainly in language production, meaning, and grammar. The Leipzig results were consistent with previous studies on spoken language and confirmed an overlap in activation between spoken and sign language, precisely in Broca’s area. The researchers also observed activation in the right frontal region, the counterpart of Broca’s area in the opposite hemisphere. This region likely processes the non-linguistic aspects of sign language, which are mostly spatial or social information. What does this mean? The movements of the hands, body, and face that make up the form of signs can be perceived similarly by deaf and hearing individuals. However, only in deaf individuals do these movements also activate the language network in the left hemisphere, including Broca’s area. Deaf signers therefore process these gestures as language. A hearing individual, by contrast, would process them as mere movements.

This research therefore indicates that Broca’s area, in the left hemisphere of the human brain, is a central hub of the language network. This region works together with other networks and can process spoken and written language, and even more abstract forms of language. “The brain is therefore specialized in language per se, not in speaking,” explains Patrick C. Trettenbrein, first author of the study [1] and doctoral student at the MPI CBS. Many questions remain open, however. Next, the research team aims to explore whether different subregions of Broca’s area specialize in either the meaning or the grammar of sign language in deaf signers. This would parallel what is observed when hearing individuals process spoken language.

What is sign language?

Much of the research on this topic traces back to Stokoe (1960), who was the first to argue that signed languages are languages like any other. With his colleagues, he compiled a dictionary of American Sign Language (ASL) (Stokoe, Casterline, & Croneberg, 1965). It describes a signed language in terms of arbitrary handshapes, palm orientations, locations, movements, spatial relations, and the order of signs. A signed language thus satisfies precise linguistic criteria (Lillo-Martin, 1997; Siple, 1997) at every level, from phonology, morphology, and syntax to semantics and pragmatics. Although ASL is one of the most studied signed languages, other signed languages have also been investigated in a few cognitive neuroscience studies. Learning the handshape structure of ASL poses the same difficulty that hearing individuals encounter when acquiring phonemes. ASL syntax has been found to follow principles similar to those of spoken grammar, in addition to a structured spatial grammar (Lillo-Martin, 1997). Accordingly, ASL is acquired through the same sequence of stages as any spoken language (Studdert-Kennedy, 1983) [2].

The neural basis of sign language

Most of our knowledge about the neural basis of linguistic communication comes from research on the mechanisms underlying spoken language. However, human language is not restricted to the oral-aural modality. Exploring the neural processes of sign language can therefore deepen our understanding of language. It can reveal which characteristics belong to language in general and which are exclusive to languages that are spoken and heard. Neuroimaging studies and studies of patients with brain lesions show that sign and spoken language share similar neural pathways: both involve the left-lateralized perisylvian network. At the same time, studies have revealed modality-specific patterns of brain activity and modality-specific language impairments in deaf and hearing individuals. These findings improve our understanding of the neural basis of cross-linguistic differences [3,4].

Recent advances regarding the neural substrate of sign language

The first 40 years of research on sign language (1960-2000) provided evidence of common neural mechanisms for sign and spoken languages, centered on the left hemisphere of the brain. This evidence came from neuroimaging studies (e.g., fMRI and PET) and from patients. More recent research, however, has challenged this picture, showing that some aspects of sign language are unique to it (e.g., iconicity, fingerspelling, linguistic facial expressions). Language production and comprehension should therefore be investigated separately. This would help identify the differences and similarities between sign and speech, and particularly the neural pathways supporting each type of language [5].

How does sign language combine and decode phonological features in production and comprehension? How are linguistically relevant parietal regions functionally integrated with frontal and temporal areas? A recent review summarized studies from the last 15-20 years to answer these questions and to inspire future research [5].

Despite shared involvement of the perisylvian cortex, how production and comprehension work in sign language is still under investigation, and this region may operate differently for the two language types. For example, the posterior superior temporal cortex appears to be activated differently when comprehending signs and when comprehending words. In response to sign language, this region seems to engage lexical-semantic representations. In response to spoken language, by contrast, it maps auditory-vocal phonological representations onto lexical semantics. Similarly, the left inferior parietal cortex supports phonological processing in both modalities but may function differently depending on the type of phonological unit. Evans et al. (2019) observed activation in the left posterior middle temporal gyrus (pMTG) during comprehension of both signs and words. However, a direct link between sign and word representations in this region remained unclear. They did find an overlap in the cross-linguistic representation of semantic categories (e.g., fruits, animals, transport) in the left posterior middle/inferior temporal gyrus. Overall, the study suggests that representing words and signs engages different processes, drawing on auditory-vocal and visual-manual phonological representations respectively [5].

The review also discusses how the left inferior frontal gyrus (IFG) and the left supramarginal gyrus (SMG) are activated during sign production and comprehension (Okada et al., 2016). Caveats aside, this dorsal frontoparietal circuit appears to support lexical selection and the integration of semantic and phonological information. The posterior middle temporal gyrus (MTG) also seems to be involved in both production and comprehension (Levelt and Indefrey, 2004), as well as in the retrieval of and access to conceptually driven lexical information.

Another region, the visual word form area (VWFA), appears to act as a bridge between fingerspelling and orthographic representations. The superior parietal cortex likewise supports both production and comprehension, but through distinct functions. During production it plans and monitors articulation, and during comprehension it performs spatial analysis and classification of constructs. The nature of syntactic processing is still under investigation, and much remains to be explored, particularly how it operates in sign production and comprehension (Matchin and Hickok, 2020). It is also unclear how neural networks, and thus the functional connectivity between brain regions, interact to support sign language. Future work on neurobiological models should therefore address the neural computations underlying sign comprehension and production. It should also establish how these computations differ from or resemble those underlying spoken language (Flinker et al., 2019) [5].

Very little is known, moreover, about the neurodevelopment of sign language. It is unclear whether it follows the same course as spoken language (Payne et al., 2019), and how early language deprivation affects brain structure and function (Hall, 2017; Romeo et al., 2018; Meek, 2020). The review concludes that the “classical model”, which assigns language production to Broca’s area and comprehension to Wernicke’s area, is underspecified. The unique features and processes of visual-manual languages instead call for a specific neurobiological model of sign language that differs from those proposed for spoken language [5].

More than 130 sign languages

Although little is known about the neurobiology of sign language, we do know that there are more than 130 recognized sign languages. American Sign Language (ASL) has been estimated to be the fourth most common language in the US.

What is the mystery behind sign languages, and how do they work in the brain? Mairéad MacSweeney, director of the University College London Deafness, Cognition, and Language Research Centre, has discussed the similarities and differences between the brain mechanisms of signed and spoken communication. A good way to start understanding this is to view signed communication as a form of human language that develops naturally whenever a group of deaf individuals needs to communicate and interact. Sign language has the same features and complexity as spoken language. And just as there are many spoken languages, signed languages also vary across countries [6].

As described above, brain scans of deaf and hearing people show similar neural patterns. This suggests that the regions devoted to language treat information coming from the eyes and from the ears in much the same way. However, hearing people listening to speech activate the auditory cortex, whereas deaf people watching signs activate the brain region responsible for analyzing visual motion. Sign language also expresses spatial relationships differently from spoken language, and in a simpler way. Take the sentence “The cup is on the table.” A hearing speaker conveys it with spoken words. A signer, by contrast, can show it physically, for example by using the hands to depict a cup being placed on a table. This engages a spatial representation generated in the left parietal lobe, and this activation appears to be stronger in deaf than in hearing people. Signed and spoken language activate overlapping brain regions. MacSweeney’s group therefore focuses on identifying the specific patterns of activation within these regions to shed light on the representations they use. Previous studies identified shared brain regions (the “where”), but it is still unclear “how” these regions respond specifically to signed or spoken language [6].

How language shapes cognition

Have you ever thought about how language shapes our cognition? Does language influence our way of thinking? Do different languages shape our thoughts differently? If yes, how?

In contrast to previous theories, Perlovsky (2011) proposed a novel approach based on a mathematical model [7]. It supports the hypothesis that language and cognition are separate but highly interconnected processes. This dual model, related to Arbib’s “language prewired brain,” is built around the mirror neuron system. It shows how a range of neuroimaging data on cognition can be modeled and explains the interaction between cognition and language. It also accounts for how different grammars shape different cultures (e.g., English or Arabic). Indeed, languages incorporate the knowledge and the history of a culture, while the function of cognition is to translate and represent this wisdom (“internal world”) toward the outside (“external world”) to interact with the environment and life events. Language is acquired early in life, in stages progressing from sounds to words, phrases, and abstract concepts. Cognition develops later and mostly through experience, though always mediated by language [7].

Given the interplay between language and cognition, how does this mechanism manifest in sign language?

Two major approaches have tried to explain the interaction between language and cognition: the developmental approach and the cross-linguistic approach. The former focuses on spatial cognition and on how children acquire this skill even before they acquire spatial language. The latter compares spatial cognition across languages, examining how language shapes the way speakers encode spatial relations. Both approaches have limitations, however, and alternative hypotheses have also been proposed. The nature of the relationship between language and cognition, and the mechanisms underlying it, remain unclear [8].

Pyers et al. (2010) studied deaf signers of Nicaraguan Sign Language (NSL) to better understand how language acquisition is shaped by cognitive development and cultural influences. The study compared two groups of deaf signers who acquired the language in Nicaragua. The first cohort acquired it during childhood in its early form. The second cohort acquired it about 10 years later, when it had become more complex. The results showed that the development of spatial cognition closely depends on the acquisition of specific aspects of spatial language. Signers of the second cohort, about 20 years old at the time of testing, used spatial language more than signers of the first cohort, then about 30 years old. Second-cohort signers also performed better on spatially guided search tasks. Use of left-right spatial relations correlated with search performance after disorientation, while marking of ground information correlated with search performance in a rotated array. The development of spatial cognition is therefore influenced by the acquisition of an enriched language, pointing to a potential causal effect of language on cognition (thought). Other approaches considered the relation between language and cognition to be culturally driven, but these linguistic effects appear decisive. Spatial language seems to shape reasoning about spatial relationships when navigating complex environments. Language thus appears to be a medium that supports spatial representations and cognitive processing [8].

For more details, see the original study [8].

"The limits of my language mean the limits of my world." (Ludwig Wittgenstein)

References:

- [1] Trettenbrein, P. C., Papitto, G., Friederici, A. D., & Zaccarella, E. (2020). The functional neuroanatomy of language without speech: An ALE meta-analysis of sign language. Human Brain Mapping, 42(3), 699–712. https://doi.org/10.1002/hbm.25254

- [2] Rönnberg, J., Söderfeldt, B., & Risberg, J. (2000). The cognitive neuroscience of signed language. Acta Psychologica, 105, 237–254. https://doi.org/10.1016/S0001-6918(00)00063-9

- [3] MacSweeney, M., Capek, C. M., Campbell, R., & Woll, B. (2008). The signing brain: The neurobiology of sign language. Trends in Cognitive Sciences, 12(11), 432–440. https://doi.org/10.1016/j.tics.2008.07.010

- [4] Corina, D. P., & Blau, S. (2016). Chapter 36: Neurobiology of sign languages. In G. Hickok & S. L. Small (Eds.), Neurobiology of Language (pp. 431–443). Academic Press. ISBN 9780124077942. https://doi.org/10.1016/B978-0-12-407794-2.00036-5

- [5] Emmorey, K. (2021). New perspectives on the neurobiology of sign languages. Frontiers in Communication, 6, 748430. https://doi.org/10.3389/fcomm.2021.748430

- [6] Richardson, M. W. (2018). Does the brain process sign language and spoken language differently? BrainFacts.org. https://www.brainfacts.org/thinking-sensing-and-behaving/language/2018/does-the-brain-process-sign-language-and-spoken-language-differently-100918 (accessed April 1, 2022)

- [7] Perlovsky, L. (2011). Language and cognition interaction neural mechanisms. Computational Intelligence and Neuroscience, 2011, Article ID 454587, 13 pages. https://doi.org/10.1155/2011/454587

- [8] Pyers, J. E., Shusterman, A., Senghas, A., Spelke, E. S., & Emmorey, K. (2010). Evidence from an emerging sign language reveals that language supports spatial cognition. PNAS, 107(27), 12116–12120. https://doi.org/10.1073/pnas.0914044107

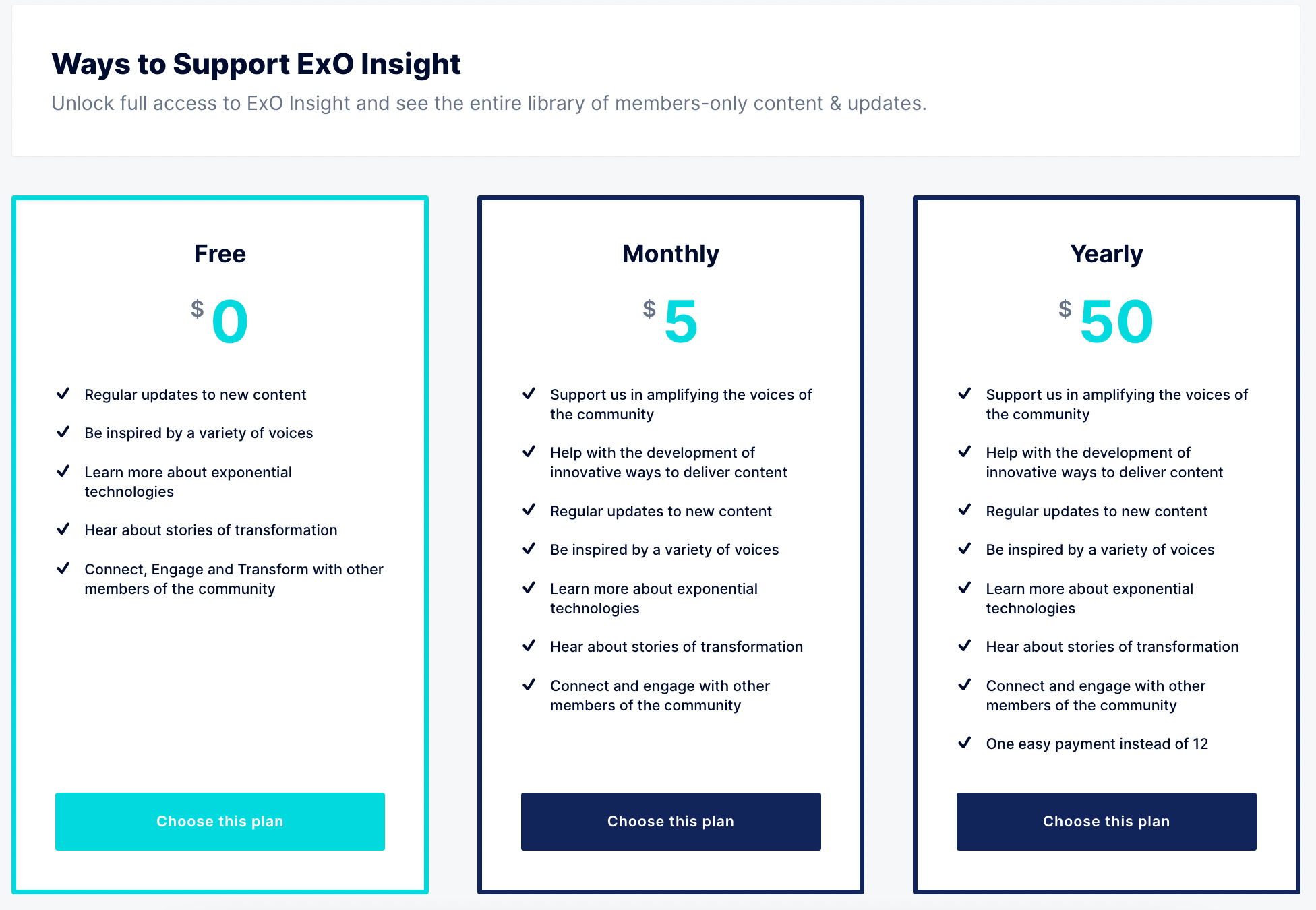
